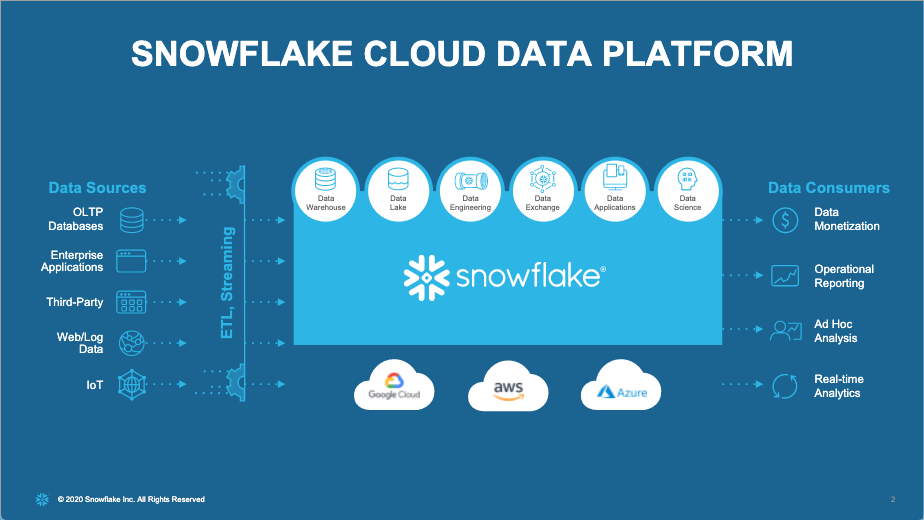

General Introduction

Key customers

7 Best Practices for Building Data Applications

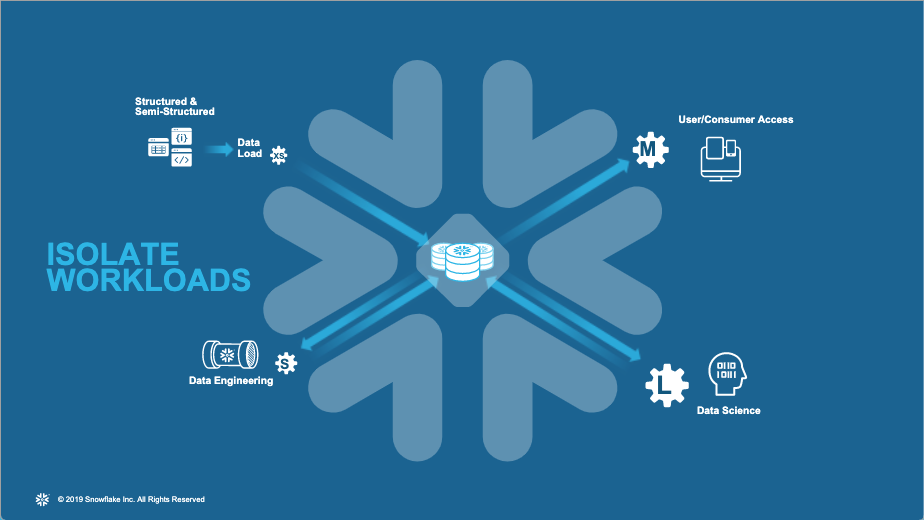

Isolation workloads with Snowflake

Key features

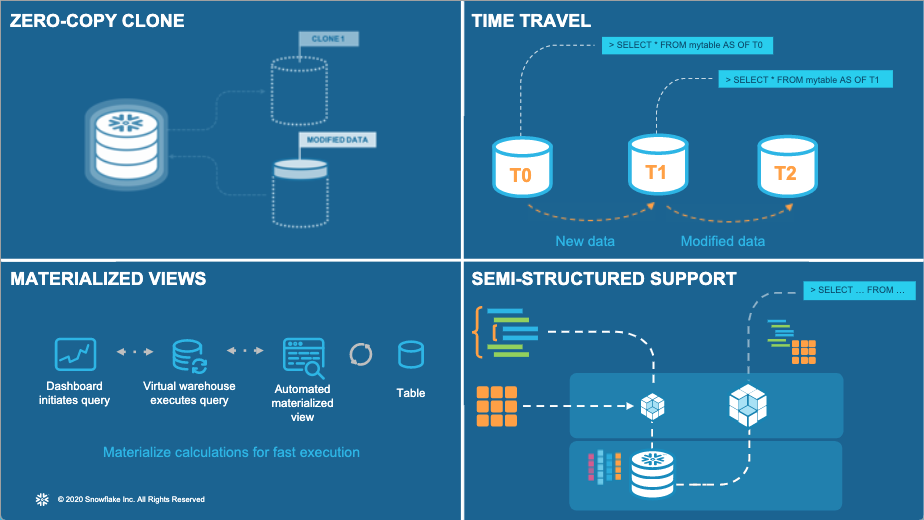

- Zero-Copy Clone

- Time Travel

- AS OF feature

- Semi-structured Support

- add timestamp column

- Materialized Views

- Snowflake materialized views is different with other platforms

Code in your Language

- snowflake connection diagnose system

- SnowPipe

Use the Ecosystem

Manage Cost

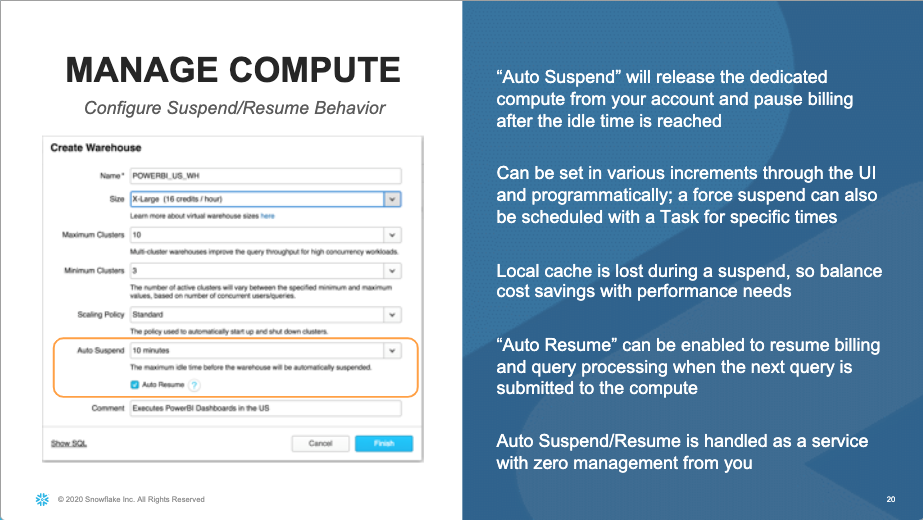

- Manage compute

- configure with

Auto Suspend - configure with

Auto Resume - configure suspend window after idle time window

- configure with

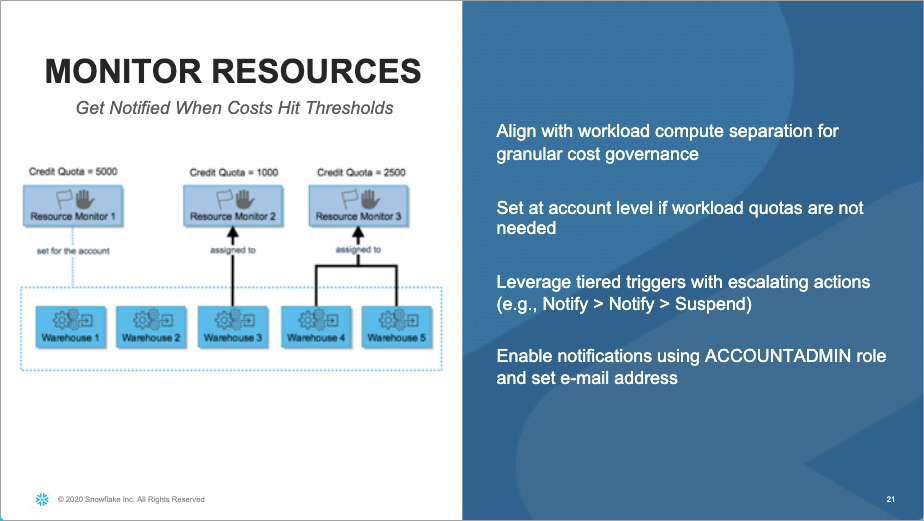

- Monitoring resources

- all data app utilise resource monitoring

FAQ

Q: Does Snowflake a transactional database?

A: Snowflake doesn’t have strong constraints like other typical databases.

referential integrity

Q: What is SnowPipe?

A: It’s a Data Ingestion tool from Snowflake ( click me ).

- serverless ingest data etl tool

- Snowflake will charge you some snowflake credits

Q: How Snowflake work with CI/CD pipeline?

A: With Snowflake, all the data copy or environment provision is just updating metadata, and things are much easier for developer.

Q: How does Snowflake handle incremental data?

A: Snowflake use append-only mode to update all the tables.

Q: How does Snowflake remove the operational burden?

A: Snowflake is a managed services by Snowflake and most of the operational work will be handle by Snowflake, e.g. backup, upgrade, load balancing for queries.

Q: How does Auto-Scaling work in Snowflake?

A: Snowflake have standard scaling policy, and also economic scaling policy, it will depend on customer’s preference.

Q: What are the business using Snowflake?

A:

- financial institution, with user behaviour log

- marketing campaign,

- customer provide data via API or data sharing feature

- data exchange between provider and customers

- security analytical services

- IoT data, trimble

- typical a BI application, but nowadays Snowflake is acting like a database + BI application.

Q: do you offer additional security component to address healthcare HIPAA compliance? please share details

A: Snowflake is HIPPA compliant platform. We have documentations that could be shared. Please reach out to the account team that can liaise with the security team and provide the requested certifications under the NDA.

Q: do you have AI/ML components to address data scientist needed on top of snowflake?

A: Yes. One of the most common workloads that run on Snowflake are Data Science workload. Snowflake has connectors for Spark, Python, R that could be used to help data scientist capture and process the data as part of data prep process and perform feature engineering operations. Snowflake then integrates with ML partners to deliver model build and deployment.

Q: I am a python coder, may I know in the deck when it shows Python “SQLAlchemy”, does it means we can only connect to Snowflakes via this ORM library, or we can directly issue raw query to Snowflakes and get results within Python code?

A: You could use Snowflake python library or Snowflake python pandas library to connect to Snowflake and execute push down data processing on Snowflake. More details here: https://docs.snowflake.com/en/user-guide/python-connector.html#snowflake-connector-for-python

Q: Do you plan to offer Vertical scaling of the Warehouse from Medium to Large (or) Large to XL? Pls let know whether there is any pattern based recommendation of Warehouse size based on the workloads.

A: Snowflake has on-demand vertical scaling of warehouses today. You can instantly scale up to any size warehouse based on your workload requirement. Today, we do not have any recommendation engine running in the background that would suggest the size of the warehouse based on the usage.

Q: What are the main factors to consider in deciding which size we need to set our warehouse for data ingestion ? Any quick tips/guidance ?

A: Snowflake provides you complete control on cost vs performance. You can execute your workload on a size of a warehouse and then scale up / down and get linear SLA scaling and decide on the right size based on your SLA requirement.

Q: can snowflake serves as datahub for other applications to extract data from, via API calls ? Essentially hosting ‘system API’ in Snowflake

A: Snowflake as a data hub is one of the most common ways to access data from Snowflake, specially in builder usecases. You will have to have an API server /. gateway that can send requests to Snowflake for data. Snowflake’s auto scale out and ability to handle concurrent connections can support such framework.

Q: Does Snowpipe recommended only for continuous data loads ?

A: It is typically built for that use case but ofcourse not restricted to the one.

going back to Snowpipe vs virtual warehouse, are you saying that for ingetion it really doent matter one over the other?

it does. Both have their use case, benefits and advantages over each other. Typically, you will use Snowpipe for Near Real Time ingestion where as COPY INTO through virtual warehouse for batch ingestion.

Q: Does Snowflake-Spark connector supports aggregations on Spark Data Stream..our requirement is after reading data using read stream..we need to find out latest records

A: If you load the data into Snowflake from your Spark stream, you can use Snowflake Stream to identify new / latest records. More details here: https://docs.snowflake.com/en/user-guide/streams.html#change-tracking-using-table-streams

Q: is snowflake zero copy cloning similar to data virtualization - is there any relationship between this two?

A: No they are not same. Snowflake zero copy cloning is similar to clone operation in traditional warehouses to setup a parallel environment. The cloned object is an independent object that could be used to perform DML operations without impacting the primary object. The beauty of Snowflake architecture is that in the process of cloning, there is no duplication of data involved.

You will have to have an API server /. gateway that can send requests to Snowflake for data. ==> do you guys hv a user guide on how to setup these API server/gateway ?

You can either use API gateways as a service from cloud providers or BYO. Snowflake usage or integration is no different for either. There are some of the reference architectures that you could refer for your use cases. Link: https://www.snowflake.com/developers/