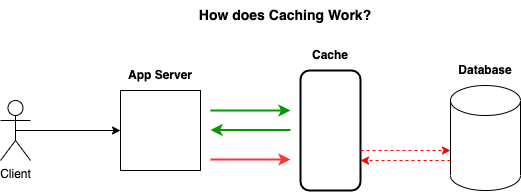

Why Caching?

- Improve performance of application.

- Save money in long term.

Speed and Performance

- Reading from memory is roughly 50 to 200 times faster than reading from disk.

- Can serve the same amount of traffic with fewer resources. Because of the speedup above, you can serve many more requests per second with the same resources.

- Pre-calculate and cache data. Twitter implemented this: it occasionally pre-computes your timeline (say, 200 tweets) and pushes that data into the cache so it can be served very quickly. If you know in advance what your users are going to need, you can really improve performance this way.

- Most apps have far more reads than writes, which is perfect for caching. On something like Twitter, a single tweet is written to the database once but may be read thousands of times, or millions of times for bigger accounts.

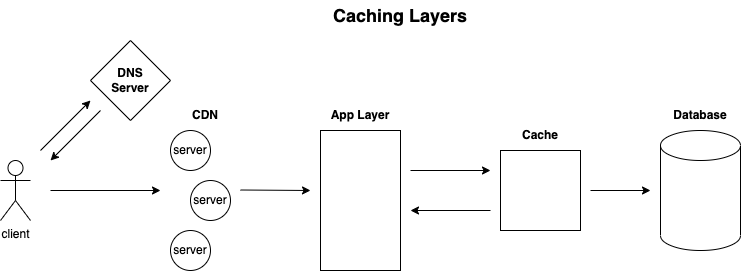

Cache Layers

- DNS

- CDN

- Application

- Database

Pseudocode

Retrieval Cache

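The original pseudocode for this step is not preserved in these notes. A minimal sketch of a cache-aside read might look like the following; the `cache` and `database` dictionaries and the `get` function are hypothetical stand-ins, not a real client API.

```python
from typing import Dict, Optional

# Hypothetical in-process stand-ins for a real cache and a real database.
cache: Dict[str, str] = {}
database: Dict[str, str] = {"user:1": "Alice"}


def get(key: str) -> Optional[str]:
    """Return the value for key, checking the cache before the database."""
    if key in cache:
        # Cache hit: the database is not touched at all.
        return cache[key]
    # Cache miss: fall back to the database.
    value = database.get(key)
    if value is not None:
        # Populate the cache so the next read for this key is a hit.
        cache[key] = value
    return value
```

The first call for a key pays the database cost; later calls are served from memory until the entry is evicted or invalidated.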

Writing Cache

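The original pseudocode for the write path is also missing. One common approach, assumed here rather than taken from the source, is to write to the database and then invalidate the cached copy so the next read reloads it. The `cache`, `database`, and `put` names are hypothetical.

```python
from typing import Dict

# Hypothetical in-process stand-ins for a real cache and a real database.
cache: Dict[str, str] = {"user:1": "Alice"}
database: Dict[str, str] = {"user:1": "Alice"}


def put(key: str, value: str) -> None:
    """Write to the database, then invalidate the cached copy of key."""
    # The database remains the source of truth.
    database[key] = value
    # Drop the cached value so a later read misses and reloads fresh data.
    cache.pop(key, None)
```

Invalidating instead of updating the cache keeps the write path simple, at the cost of one extra cache miss on the next read.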

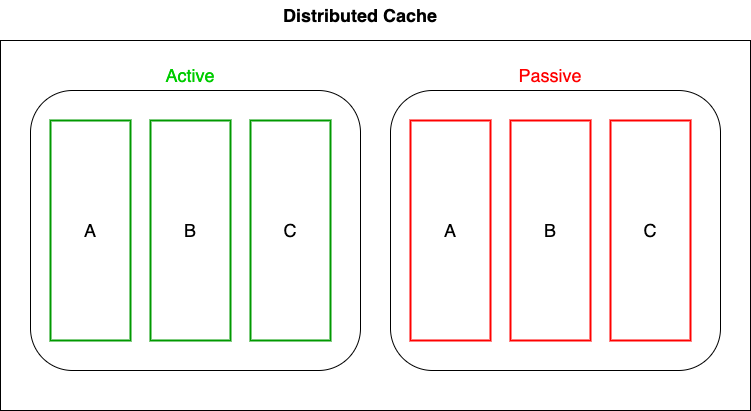

Distributed Cache

- Works the same as a traditional cache.

- Has built-in functionality to replicate data, shard data across servers, and locate the proper server for each key.
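To illustrate how a key can be mapped to a server, here is a rough sketch that hashes the key and takes the result modulo the number of servers. The `ShardedCache` class and the server names are made up for illustration, each "server" is just a dictionary, and replication is left out. Real distributed caches often use consistent hashing instead, so that adding or removing a server moves fewer keys.

```python
import hashlib
from typing import Dict, List, Optional


class ShardedCache:
    """Toy sharded cache: each 'server' is a plain in-memory dict."""

    def __init__(self, server_names: List[str]) -> None:
        self.server_names = server_names
        self.servers: Dict[str, Dict[str, str]] = {name: {} for name in server_names}

    def server_for(self, key: str) -> str:
        # Use a stable hash so every client maps the same key to the same server.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.server_names)
        return self.server_names[index]

    def set(self, key: str, value: str) -> None:
        self.servers[self.server_for(key)][key] = value

    def get(self, key: str) -> Optional[str]:
        return self.servers[self.server_for(key)].get(key)
```

Python's built-in `hash()` is avoided for strings here because it is randomized per process, which would send the same key to different servers from different clients.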

Cache Eviction

- Preventing stale data

- Caching only most valuable data to save resources

- Time to Live (TTL).

- Set a time period before a cache entry is deleted.

- Used to prevent stale data.

- Some data tolerates staleness. The like count on a tweet, for example, does not need to update immediately; serving a value that is a few seconds or minutes old is not a big deal, because nobody’s life depends on an exact like count.

- Other data does not. In health care, for example, a patient’s heart rate or breathing rate should probably not be cached at all; you want live data with no delay.

- The right TTL depends on the kind of data you are working with.
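A TTL can be sketched by storing an expiry time next to each value and treating expired entries as misses. The `TTLCache` class below is a hypothetical illustration, not a specific library's API. Its `clock` parameter is an assumption added so that expiry can be tested without sleeping.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Toy cache where every entry expires ttl_seconds after it is set."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # Maps key -> (value, absolute expiry time).
        self._entries: Dict[str, Tuple[Any, float]] = {}

    def set(self, key: str, value: Any) -> None:
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Expired: evict lazily on read and report a miss.
            del self._entries[key]
            return None
        return value
```

A like counter might use a TTL of a few minutes, while data that must always be live would bypass the cache entirely.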

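Facebook ran into an interesting problem known as the thundering herd problem: when a popular cache entry expires, many requests miss at the same time and all hit the database at once.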

Least Recently Used Cache (LRU Cache)

- Once the cache is full, remove the least recently accessed key and add the new key.
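A small LRU cache can be sketched with Python's `collections.OrderedDict`, which remembers insertion order and can move a key to the end on access. The `LRUCache` class is illustrative and assumes a capacity of at least 1.

```python
from collections import OrderedDict
from typing import Any, Optional


class LRUCache:
    """Toy LRU cache: the least recently used key is evicted when full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity  # assumed to be >= 1
        self._entries: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._entries:
            return None
        # Mark the key as most recently used.
        self._entries.move_to_end(key)
        return self._entries[key]

    def set(self, key: str, value: Any) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            # The first item is the least recently used one.
            self._entries.popitem(last=False)
```

Both reads and writes count as "use" in this sketch, so a key that is read often stays in the cache even if it was written long ago.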

Least Frequently Used Cache (LFU Cache)

- Track number of times key is accessed.

- Drop the least frequently used key when the cache is full.
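The two steps above can be sketched with a counter per key. This hypothetical `LFUCache` scans all counters on eviction, which is O(n) and fine for a demonstration; production implementations typically use more elaborate structures. It assumes a capacity of at least 1 and counts a write as one access.

```python
from typing import Any, Dict, Optional


class LFUCache:
    """Toy LFU cache: the key with the fewest accesses is evicted when full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity  # assumed to be >= 1
        self._values: Dict[str, Any] = {}
        self._hits: Dict[str, int] = {}

    def get(self, key: str) -> Optional[Any]:
        if key not in self._values:
            return None
        self._hits[key] += 1
        return self._values[key]

    def set(self, key: str, value: Any) -> None:
        if key not in self._values and len(self._values) >= self.capacity:
            # Evict the key with the lowest access count (linear scan).
            victim = min(self._hits, key=lambda k: self._hits[k])
            del self._values[victim]
            del self._hits[victim]
        self._values[key] = value
        self._hits[key] = self._hits.get(key, 0) + 1
```

Unlike LRU, a key that was popular in the past can linger here even if it is no longer read, which is one reason some systems decay the counters over time.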

Caching Strategy

- Cache Aside - most common

- Read Through.

- Write Through.

- This is aimed at write-intensive applications.

- The cache is updated first, and the same write is then applied to the database synchronously, before the write is acknowledged.

- This maintains high consistency between your cache and your database.

- As a result, it adds latency to every user write and slows writes down.

- Write Back.

- This is aimed at write-intensive applications.

- Data is written directly to the cache, which is very fast; the database is updated later.

- A side effect is that if the cache fails before the data is written to the database, that data is lost.

- This is risky, but if you really need to scale writes and consistency isn’t essential, it is one way to do it.
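The difference between write-through and write-back can be sketched side by side. Both classes below are hypothetical illustrations that use a plain dictionary as the "database". In a real write-back cache the flush would run in the background rather than through an explicit `flush()` call.

```python
from typing import Dict, Set


class WriteThroughCache:
    """Every write updates the cache, then the database, before returning."""

    def __init__(self, database: Dict[str, str]) -> None:
        self.database = database
        self.cache: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value
        # Synchronous database write: consistent, but adds latency.
        self.database[key] = value


class WriteBackCache:
    """Writes go to the cache only; the database is updated on flush()."""

    def __init__(self, database: Dict[str, str]) -> None:
        self.database = database
        self.cache: Dict[str, str] = {}
        self.dirty: Set[str] = set()  # keys not yet persisted

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value
        self.dirty.add(key)

    def flush(self) -> None:
        # Anything still dirty when the cache crashes would be lost.
        for key in self.dirty:
            self.database[key] = self.cache[key]
        self.dirty.clear()
```

With write-back, several writes to the same key between flushes collapse into a single database write, which is where the write scaling comes from.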

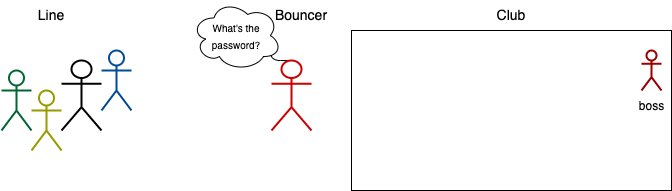

Cache Consistency

- How to maintain consistency between database and cache efficiently.

- Importance depends on use case.

- Most importantly, for writes you want to make sure that the user who wrote the data sees the fresh data immediately.